2025 Predictions

1. Kubernetes Security Challenges

Spoiler: Kubernetes security has been my primary focus for almost two years, and I've seen many security challenges when working with Kubernetes and cloud native infrastructure.

From speaking with hundreds of people about these challenges, it's clear that the space needs more offensive security research that can inform defense. Logging is hard, and incident response is downright painful. As Kubernetes adoption increases, so will the demand for Kubernetes security experts who can answer difficult questions. As of 2023, 66% of non-cloud companies were using Kubernetes, and 84% were using or evaluating it.

I'm reminded of Active Directory before and after SpecterOps released Certified Pre-Owned. That research opened Pandora's box and showed that seemingly innocuous services such as Active Directory Certificate Services could be leveraged by attackers. The Cloud Native landscape likely has similar flaws; we just haven't found them yet. And by "we," I mean the community. I'm confident that nation-state actors are actively picking apart these open-source tools and have been for years.

Offensive security plays an important role here. The more offensive security professionals look for ways to exploit Kubernetes and cloud native technologies, the safer those technologies become.

2. People-Led Brands

The most trustworthy brands are not brands in the typical sense; they're people. Who do you trust more?

- Dave Kennedy or TrustedSec?

- John Strand or Black Hills?

- Your SANS instructor or SANS?

- A company blog post on a CVE or a Twitter user with an anime profile picture posting a text file called vuln_writeup.txt?

This fits well with the Federated Security Training Model I proposed previously. Security work depends on trust, and it's easier to build trust with a person than with a brand. Recommended reading: People Are The New Brands

3. Discovery of Malicious Browser Extensions

In late December 2024, what began as a small browser extension compromise snowballed when a few security researchers found that at least 33 browser extensions had been compromised. Browser extensions are powerful, and I think many more malicious ones have been operating for years; we just haven't looked deeply enough. @Tuckner has been leading this space.
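Auditing what's already installed is a reasonable first step. Below is a minimal Python sketch, assuming Chrome's on-disk layout of `<profile>/Extensions/<extension_id>/<version>/manifest.json` (on Linux the default profile is typically `~/.config/google-chrome/Default`). The set of "risky" permissions is an illustrative choice, not an official classification, and a broad permission alone doesn't make an extension malicious; it only tells you where to look first.

```python
import json
import sys
from pathlib import Path

# Illustrative API permissions that expose browsing data or browser control.
# This is an assumption for triage, not an official risk classification.
RISKY_PERMISSIONS = {
    "tabs", "cookies", "webRequest", "scripting",
    "history", "debugger", "nativeMessaging",
}
# Host patterns that match every site.
BROAD_HOSTS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def scan_extensions(root):
    """Report extensions under root whose manifests request risky grants.

    Expects the layout <root>/<extension_id>/<version>/manifest.json.
    """
    findings = []
    for manifest_path in sorted(Path(root).glob("*/*/manifest.json")):
        try:
            # utf-8-sig tolerates a leading byte-order mark
            manifest = json.loads(manifest_path.read_text(encoding="utf-8-sig"))
        except (OSError, ValueError):
            continue  # skip unreadable or malformed manifests
        if not isinstance(manifest, dict):
            continue
        # MV2 mixes host patterns into "permissions"; MV3 moves them to "host_permissions".
        requested = set()
        for key in ("permissions", "host_permissions"):
            entries = manifest.get(key, [])
            if isinstance(entries, list):
                requested.update(e for e in entries if isinstance(e, str))
        risky = sorted(p for p in requested if p in RISKY_PERMISSIONS or p in BROAD_HOSTS)
        if risky:
            findings.append({
                "id": manifest_path.parent.parent.name,
                # Localized names show up as __MSG_...__ placeholders.
                "name": manifest.get("name", "?"),
                "version": manifest.get("version", "?"),
                "risky": risky,
            })
    return findings


if __name__ == "__main__":
    for item in scan_extensions(sys.argv[1]):
        print(f"{item['id']} {item['name']} {item['version']}: {', '.join(item['risky'])}")
```

Pass the Extensions directory as the only argument; any hits are a starting point for manual review, not a verdict.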

4. Managed Security Tooling (as a service)

Open-source security tooling is wonderful, but not every company has the time, money, or personnel to deploy open-source tools and turn their output into actionable dashboards. The leader in this space is ProjectDiscovery, which publishes incredible tooling, including Nuclei, naabu, and subfinder, and also offers a sleek web-based platform that runs these tools as a managed service.
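To make the "turn output into something actionable" step concrete, here is a minimal Python sketch of the glue code a managed platform saves you from writing. It reads Nuclei's JSON Lines output (produced with something like `nuclei -u https://example.com -jsonl -o results.jsonl`; check `nuclei -h` for your version's flags) and groups unique findings by severity. The field names `template-id`, `host`, and `info.severity` reflect Nuclei's JSON output as I understand it; treat them as assumptions and verify them against your own results.

```python
import json
import sys
from collections import defaultdict

SEVERITY_ORDER = ["critical", "high", "medium", "low", "info", "unknown"]


def rank(severity):
    """Sort key: known severities in order, anything unexpected last."""
    return SEVERITY_ORDER.index(severity) if severity in SEVERITY_ORDER else len(SEVERITY_ORDER)


def summarize(lines):
    """Group Nuclei JSONL findings as severity -> sorted unique (template-id, host)."""
    by_severity = defaultdict(set)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        finding = json.loads(line)
        severity = str(finding.get("info", {}).get("severity", "unknown")).lower()
        # Collapse repeated matches of the same template on the same host.
        by_severity[severity].add((finding.get("template-id", "?"), finding.get("host", "?")))
    return {sev: sorted(by_severity[sev]) for sev in sorted(by_severity, key=rank)}


if __name__ == "__main__":
    with open(sys.argv[1], encoding="utf-8") as fh:
        report = summarize(fh)
    for severity, items in report.items():
        print(f"{severity.upper()}: {len(items)} unique finding(s)")
        for template_id, host in items:
            print(f"  {template_id} @ {host}")
```

Deduplicating on (template, host) is a deliberate choice: one misconfiguration often matches several URLs, and a dashboard that counts each match separately tends to bury the signal.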

Low Orbit Security: Gubble Released

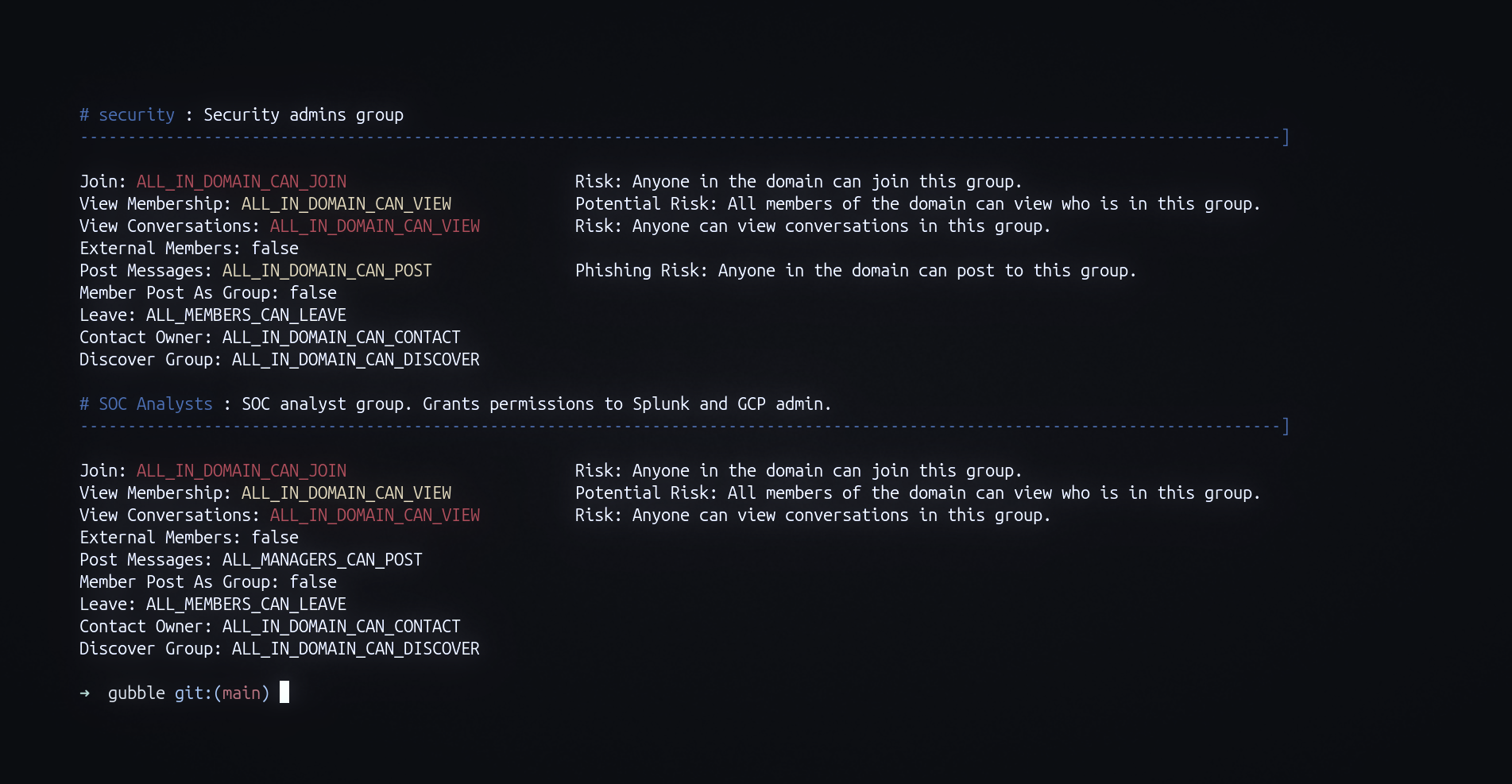

This week, I released the open-source tool gubble. Gubble is a tool that queries the Google Workspace API to analyze Group permissions, allowing both offensive and defensive teams to programmatically identify risky permissions.

In this example, everyone in the domain can view the conversations of the HR group.

Gubble finds overly permissive groups, such as an HR group that exposes highly sensitive information or a "SOC analysts" group that anyone in the domain can join.

If you use Workspace, check it out to quickly scan your Google Groups and identify risky misconfigurations.
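This is not gubble's implementation. It's a minimal Python sketch of the kind of check such a tool performs, assuming group settings shaped like the Google Groups Settings API resource, with fields such as `whoCanViewGroup`, `whoCanJoin`, and `whoCanPostMessage`. Fetching the settings (authentication and API calls) is omitted, and which values count as risky, along with their severities, is an illustrative policy rather than an official one.

```python
# Illustrative policy: setting value -> severity. Not gubble's actual rules.
RISKY_VALUES = {
    "whoCanViewGroup": {"ANYONE_CAN_VIEW": "high", "ALL_IN_DOMAIN_CAN_VIEW": "medium"},
    "whoCanJoin": {"ANYONE_CAN_JOIN": "high", "ALL_IN_DOMAIN_CAN_JOIN": "medium"},
    "whoCanPostMessage": {"ANYONE_CAN_POST": "high"},
}


def assess_group(settings):
    """Return (field, value, severity) tuples for risky settings in one group."""
    findings = []
    for field, risky in RISKY_VALUES.items():
        value = settings.get(field)
        if value in risky:
            findings.append((field, value, risky[value]))
    return findings


if __name__ == "__main__":
    # Hypothetical group mirroring the HR example: the whole domain can read it.
    hr_group = {
        "email": "hr@example.com",
        "whoCanViewGroup": "ALL_IN_DOMAIN_CAN_VIEW",
        "whoCanJoin": "INVITED_CAN_JOIN",
    }
    for field, value, severity in assess_group(hr_group):
        print(f"[{severity}] {hr_group['email']}: {field}={value}")
```

Keeping the policy in a plain dictionary makes it easy for offensive and defensive teams to tune which values they care about without touching the check itself.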

The AI Sugar Crash

I've been experimenting with AI for coding recently. The conclusion I've come to is that it's like sugary candy. In moderation, it can be enjoyable and make your life better. In excess, it will make you feel great in the short term, but further down the line, you'll typically regret it. This is especially apparent when working on programs with somewhat complex behavior.

I've overindulged and will be using AI tools less going forward, even though they've helped me. Why? For most projects, I care less about simply getting something to work than about understanding it fully.

Some areas I have found AI useful for are:

- Extracting text from images: When taking a course, I can upload a screenshot of the code on a slide and paste the extracted code directly into my notes, which is much more useful than pasting an image.

- Quick proofs of concept: Need to quickly write a Python script that does something basic or generate a quick YAML manifest? AI tools are great for this.

Caught My Eye

- Nuclei Signature Bypass: The finding is interesting, but what's more interesting is that Wiz uses Nuclei under the hood for its tooling. I know many other vendors use it too, but it's still surprising.

- Fuzzing-lab: UCLA's Cyber Fuzzing Labs

- How I’m trying to use BlueSky without getting burned again: An interesting writeup about the role of social media. Its thesis: direct people to spaces you control, and don't rely on social media platforms to maintain those spaces and connections for you.

- Go-Installer: A bash script to install the latest version of Go. (I reviewed the code and then forked it because I'm paranoid; an installer script like this would be a great vector for supply chain compromise.) You can install Go with:

bash <(curl -sL https://raw.githubusercontent.com/LowOrbitSecurity/go-installer/refs/heads/master/go.sh)

- Go-out: Nifty tool for testing egress.

- NFS Security: Identifying and Exploiting Misconfigurations: A dive into NFS security features and attacks.

- The code monkey’s guide to cryptographic hashes for content-based addressing: A wonderful guide on hashes.

- Collection of insane and fun facts about SQLite: Self-explanatory. 24 unhinged facts about SQLite.

- People Are The New Brands: Mentioned above. A great read on branding (not security-related).

- The Anti-EDR Compendium: Deep dive into EDR attacks and detection methods.
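On the Go-Installer paranoia: piping a remote script into bash runs whatever the URL serves at that moment, including anything pushed after your review. One mitigation, built on the same idea as content-based addressing, is to pin the SHA-256 hash of the exact version you reviewed and refuse anything that doesn't match. Below is a minimal Python sketch, assuming you computed the expected hash yourself after reviewing the script; the output filename `go.sh` is an arbitrary choice.

```python
import hashlib
import hmac
import sys
import urllib.request


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest: the content's address, in content-addressing terms."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_hex: str) -> bool:
    """True only if data hashes to the pinned value (case-insensitive hex)."""
    # compare_digest avoids an early-exit comparison; mostly hygiene here.
    return hmac.compare_digest(sha256_hex(data), expected_hex.strip().lower())


def fetch_verified(url: str, expected_hex: str) -> bytes:
    """Download url and raise if its contents differ from the reviewed version."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        data = resp.read()
    if not verify(data, expected_hex):
        raise ValueError(f"hash mismatch for {url}: got {sha256_hex(data)}")
    return data


if __name__ == "__main__":
    url, expected = sys.argv[1], sys.argv[2]
    script = fetch_verified(url, expected)
    with open("go.sh", "wb") as fh:
        fh.write(script)
    print("hash OK; saved to go.sh for a final look before running it")
```

The trade-off is that every legitimate update to the script now fails verification until you review it and pin the new hash, which is exactly the friction a paranoid workflow wants.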